Augmented reality is one of those impressive technologies that hasn’t seen a great deal of impressive application, despite its maturity and potential. To their credit, BBDO Singapore developed a bold creative vision using AR for a major product launch. Singtel’s Dash mobile app, through a partnership with Standard Chartered Bank, was to replace cash in Singapore for retail, taxis and payments between friends, and put several other financial services at your fingertips.

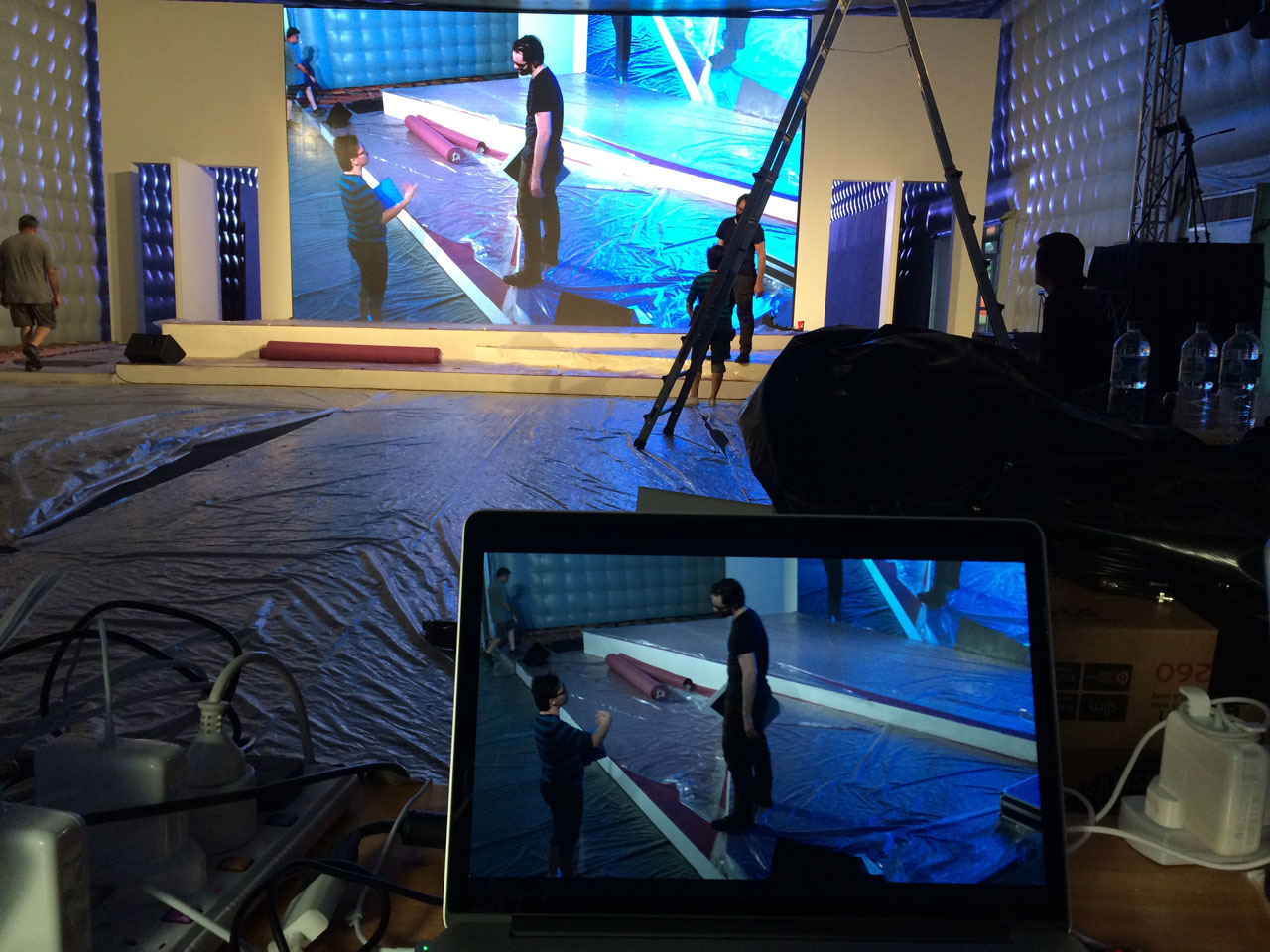

We developed software and content for a live augmented reality presentation launching Dash and demonstrating all of its features to an audience of over 200 members of the press, commentators, shareholders and executives. The event was also covered by several camera crews for various TV and internet outlets.

This complex project was a great technical, logistical and creative challenge. We needed to meticulously design and plan our execution to accommodate many moving parts, both human and technological. We faced all the variability that comes with a live show, plus the added challenge that AR makes the digital content highly dependent on real-world variables: the lighting, stage dimensions, audience perspective and MC delivery. What’s more, because the venue was being constructed specially for this show, we would only be able to test the system on-site for a couple of days before the event. The solution had to be intelligent and flexible. It only had to work once, but it had to work perfectly.

May 2014

Clients: Method, BBDO, Singtel

The Live Show

Production

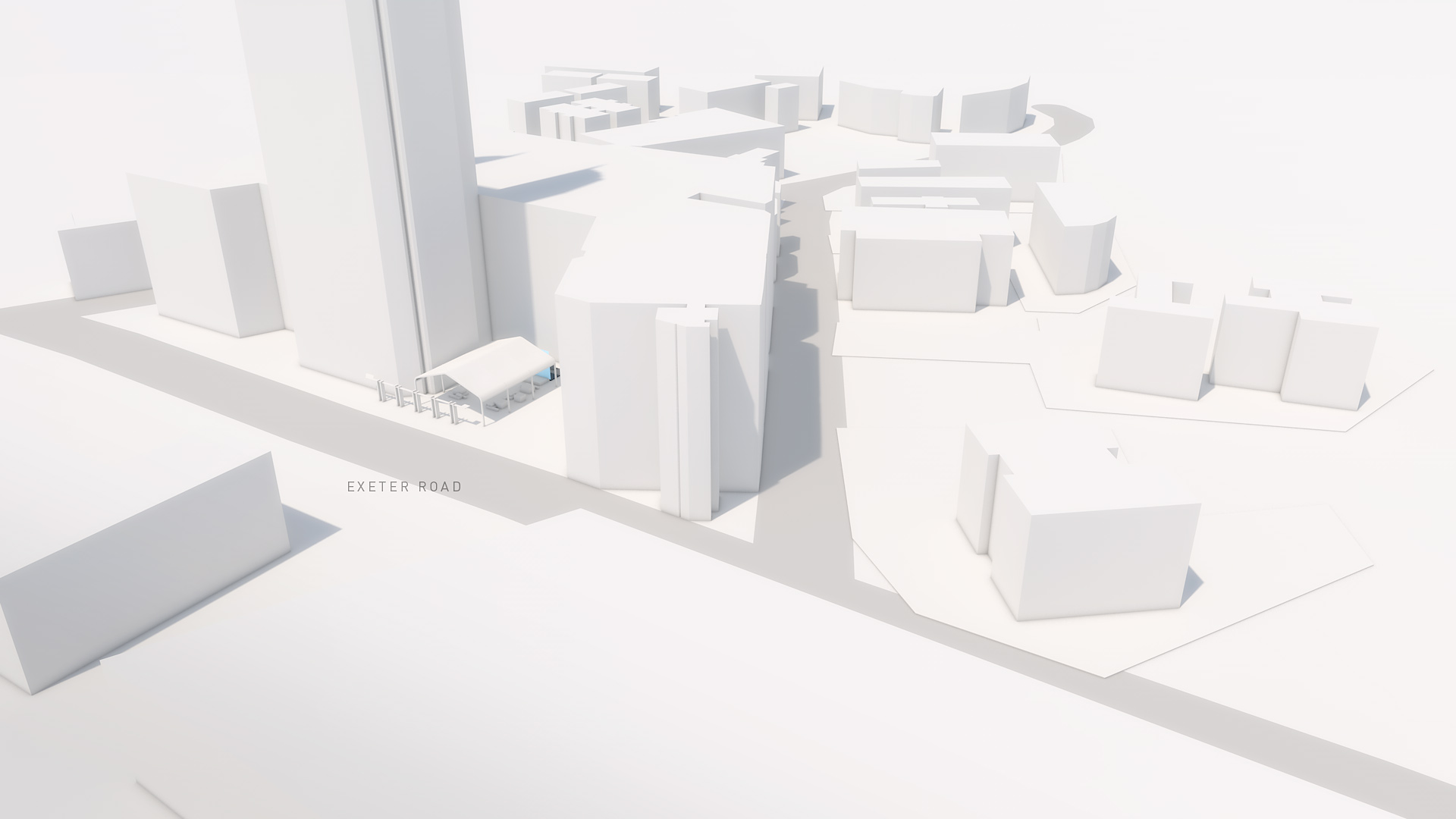

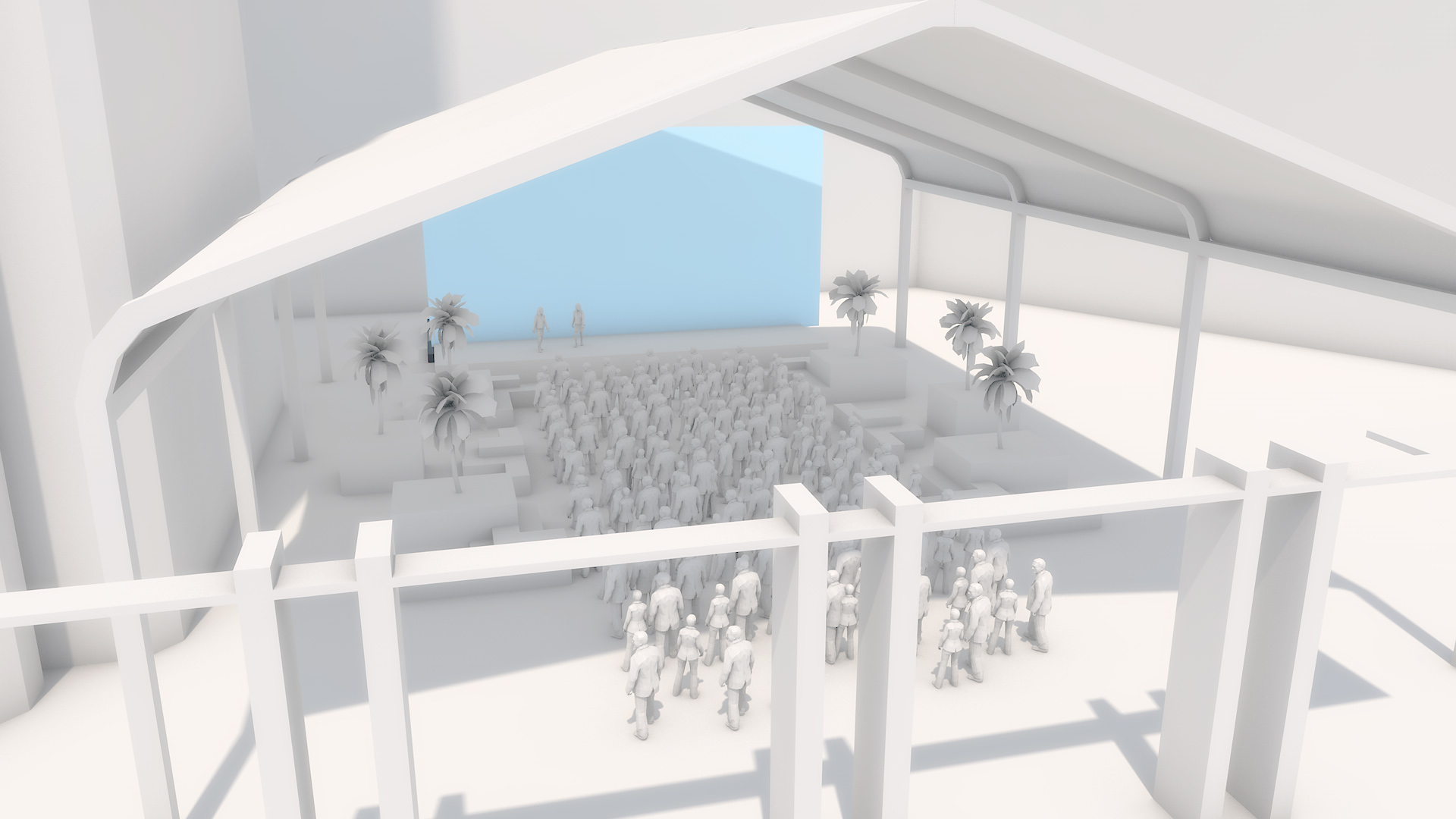

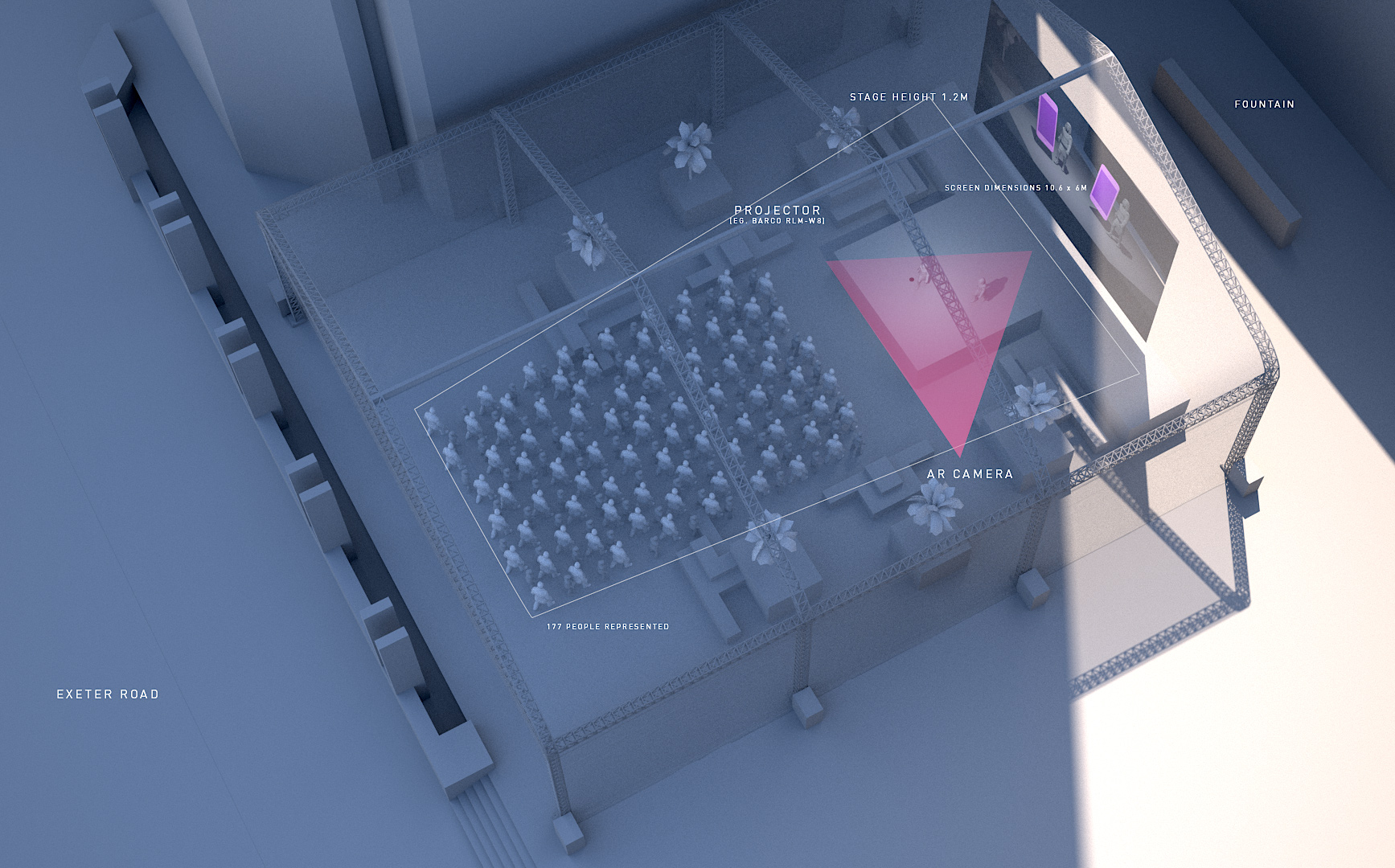

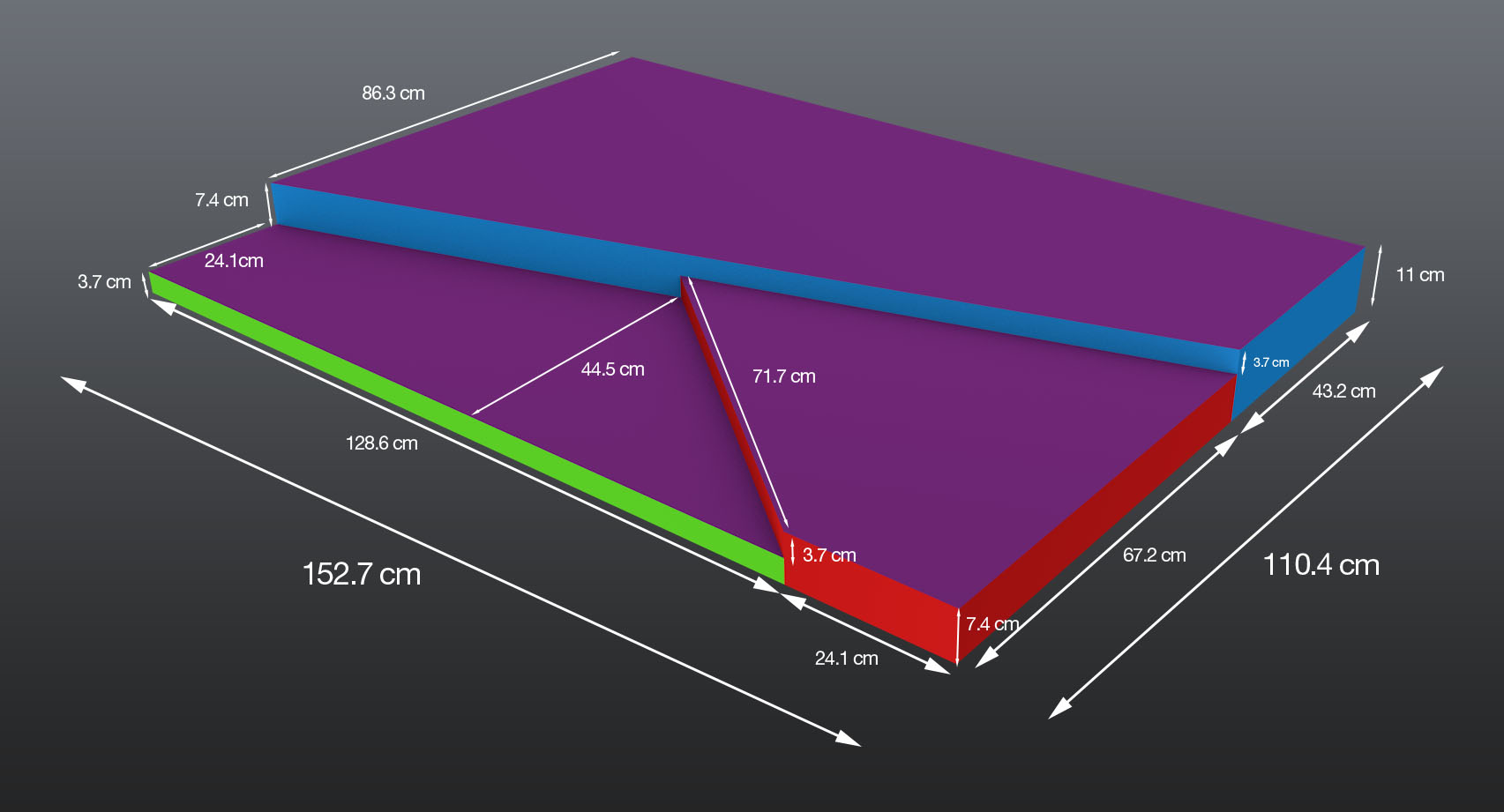

AR is a fairly difficult concept for the uninitiated to wrap their heads around, and we always want to make sure our clients are comfortable by helping them understand the technology being applied to achieve the creative vision. Working from plans we acquired from the staging company, we presented detailed mockups that showed how the show would appear from the perspective of an actual audience member.

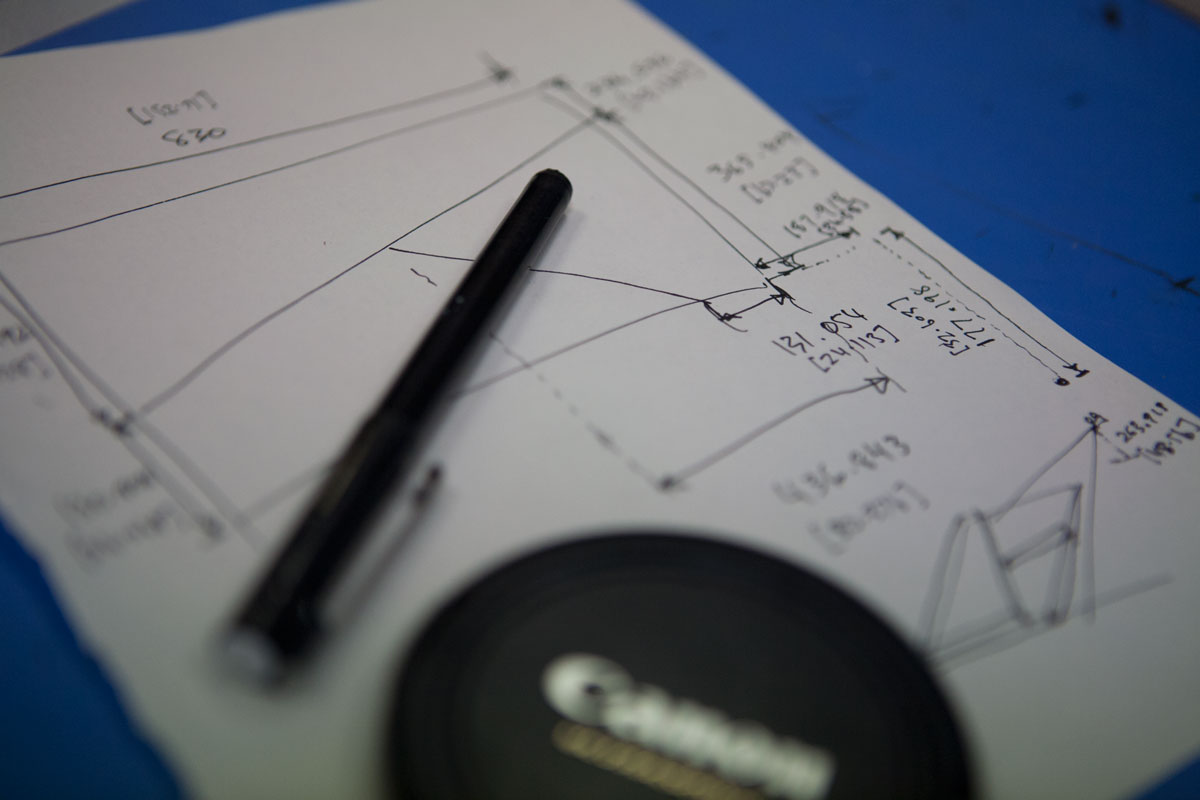

We developed a precise camera simulation that allowed us to calculate exactly where our camera would need to be positioned and provide this information to the staging company early on. The location, tent and stage design were changed at different points in production, and this mockup system allowed us to verify for the staging company that the changes could still accommodate the client and agency’s vision and deliver an excellent experience for the audience.

Scaling Down

Testing at small scale is often crucial when developing physical installations, especially when the performance of the system must be 100% guaranteed. We commandeered the ping-pong table in our studio to set up an exact 5.435 : 1 scale testbed. Later we developed 3D plans of the final stage design and had a scale replica cut by a CNC machine from foam. This further bolstered the client’s confidence and ensured that all stakeholders had a clear picture of how the show was to look.

The scale setup allowed us to test almost all aspects of the system and deliver WIPs to the client that clearly showed what the final show experience would be, and how each of BBDO’s storyboards translated into the various AR scenes. These WIPs also included several complete run-throughs of the entire script, with Iain’s smooth baritone and puppeteering giving life to our moustachioed scale model of Rosh Gidwani.

Content

We produced all content for the show in-house, including modelling and animation. RealFlow was used to achieve the fluid purple splash effect featured at the beginning and end of the show. We developed a set of physically-based shaders and used them on all the 3D objects to give a realistic and consistent feel.

Technology

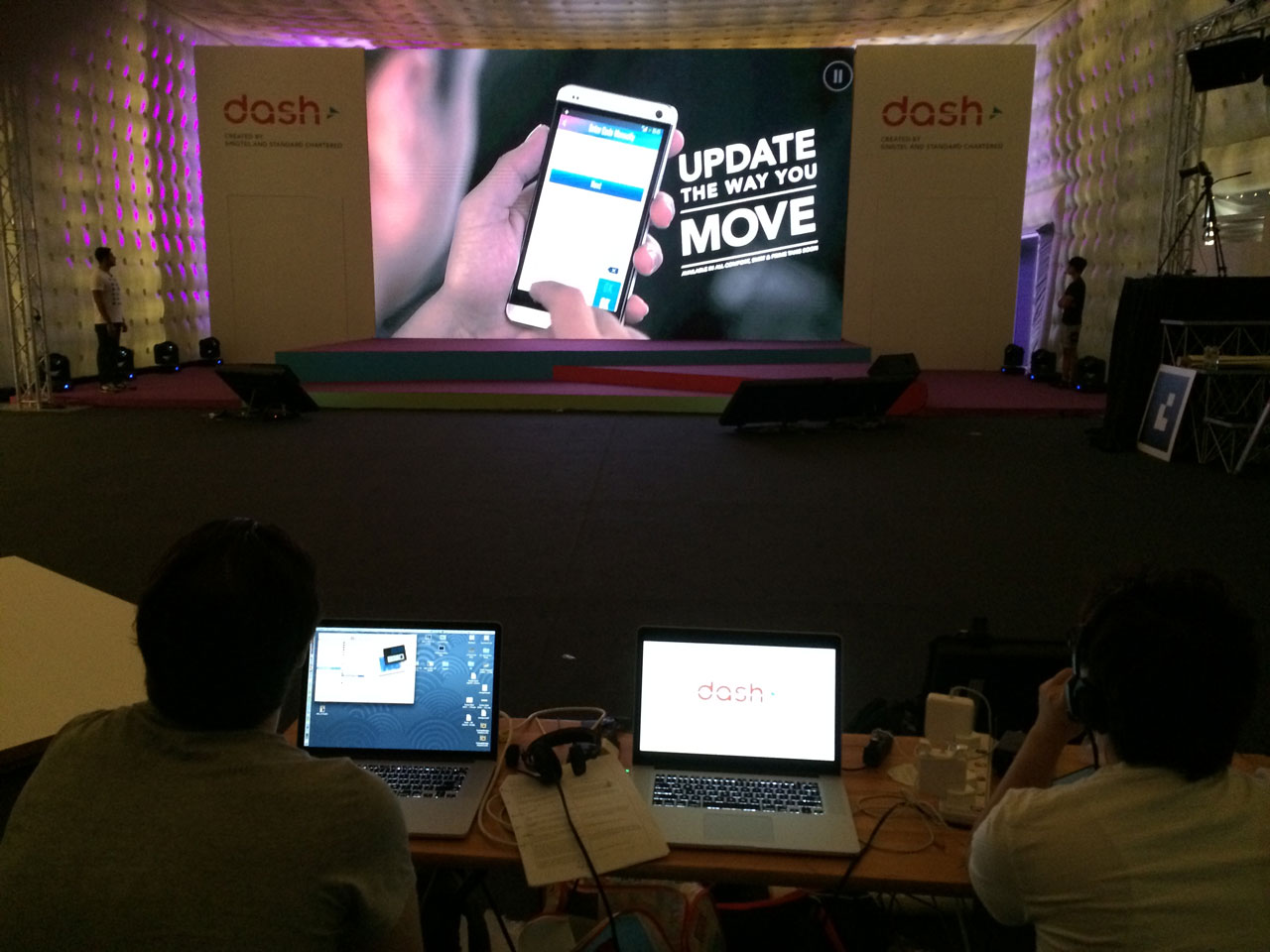

The Blackmagic Cinema Camera was chosen for its performance, small size, HD-SDI output and (unlike most high-quality video cameras) ability to run for hours. This amazing camera really got stress-tested during our rehearsals in Singapore, which took place before the air-conditioning was installed: it ran continuously without a hitch for entire days in 38°C heat! The lens was a Canon 16-35mm at full wide, as calculated in our camera simulation. This focal length allowed the camera platform to be positioned in a location that wouldn’t obstruct any seating while still taking in the entire stage.
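The focal-length reasoning above comes down to simple pinhole-camera geometry. The following is a minimal sketch of that kind of calculation, not our actual simulation: the sensor width is an assumed figure for the camera's active sensor area, and the stage width and framing margin are hypothetical values chosen only for illustration.

```python
import math

def horizontal_fov_deg(focal_length_mm, sensor_width_mm):
    """Horizontal field of view of an ideal pinhole camera, in degrees."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

def min_camera_distance_m(stage_width_m, focal_length_mm, sensor_width_mm, margin=1.0):
    """Closest distance at which the full stage width (times a safety margin) fits in frame.

    For a pinhole camera the visible width at distance d is d * sensor_width / focal_length.
    """
    return stage_width_m * margin * focal_length_mm / sensor_width_mm

SENSOR_WIDTH_MM = 15.81   # ASSUMPTION: active sensor width, not stated in this write-up
FOCAL_LENGTH_MM = 16.0    # full wide on a 16-35 zoom
STAGE_WIDTH_M = 12.0      # HYPOTHETICAL stage width
MARGIN = 1.1              # HYPOTHETICAL 10% framing margin

fov = horizontal_fov_deg(FOCAL_LENGTH_MM, SENSOR_WIDTH_MM)
distance = min_camera_distance_m(STAGE_WIDTH_M, FOCAL_LENGTH_MM, SENSOR_WIDTH_MM, MARGIN)
print(f"Horizontal FOV: {fov:.1f} degrees")
print(f"Minimum camera distance: {distance:.2f} m")
```

A longer focal length pushes the camera further back for the same framing, which is why the widest setting gave the most freedom in placing the camera platform.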

A 30m SDI cable ran from the Cinema Camera to the back of house, where it connected to a Blackmagic DeckLink, which in turn fed a MacBook Pro running our software. The software read the video stream from the DeckLink via Apple’s native QuickTime interfaces. All this gave us the high performance we needed to pull in film-quality 1080p live video and process it for AR.

We worked closely with ARToolWorks, who were excellent technology partners, supplying the underlying AR processing library and giving us custom builds that allowed processing of full 1080p video at high frame rates.

We also simulated the LED wall pixel stride from the audience perspective so that we could specify a minimum resolution and screen size to the staging company. In particular, this ensured that the AR phone screens showing the functions of the Dash app would be legible. Our software incorporated a number of features to deal with various unknowns and failures and ensure the live show would go off without a hitch.
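The LED wall legibility check described above can be illustrated with a little arithmetic. This is a rough sketch under assumed numbers, not the simulation we ran: the rendered phone height, number of text rows, minimum pixels per row and viewing distance are all hypothetical.

```python
import math

def max_pixel_pitch_mm(phone_height_m, text_rows, min_px_per_row):
    """Largest LED pixel pitch that still gives each text row enough wall pixels."""
    return phone_height_m * 1000.0 / (text_rows * min_px_per_row)

def angular_size_arcmin(size_m, distance_m):
    """Visual angle subtended by an object of a given size at a given distance."""
    return math.degrees(math.atan(size_m / distance_m)) * 60.0

PHONE_HEIGHT_M = 2.0     # HYPOTHETICAL height of the AR phone as shown on the wall
TEXT_ROWS = 20           # HYPOTHETICAL number of text rows on the app screen
MIN_PX_PER_ROW = 10      # ASSUMPTION: wall pixels needed per row for readable text
VIEW_DISTANCE_M = 25.0   # HYPOTHETICAL distance to the furthest seat

pitch = max_pixel_pitch_mm(PHONE_HEIGHT_M, TEXT_ROWS, MIN_PX_PER_ROW)
row_angle = angular_size_arcmin(PHONE_HEIGHT_M / TEXT_ROWS, VIEW_DISTANCE_M)
print(f"Maximum pixel pitch: {pitch:.1f} mm")
print(f"Each text row subtends {row_angle:.1f} arcminutes at the back row")
```

The first figure bounds the wall hardware, while the second indicates whether the text is large enough for the furthest viewer regardless of how fine the wall is.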

Stage Alignment

We developed a special shader that cast shadows of the different 3D objects onto an invisible 3D model of the stage. This gave the effect of shadows being cast onto the physical stage itself and made the AR illusion much more compelling. But for this shadow effect to work, our invisible 3D model of the stage needed to be aligned exactly with the image of the actual stage. This alignment was also important for the overall AR effect, since the 3D scene generated by our software also had to match the camera’s perspective of the real stage exactly. By tracking an AR glyph placed on the stage at a known location, the software could instantly calibrate its internal 3D camera orientation to match the real-world camera’s viewpoint. Our 3D stage model was also fully skinned so we could make adjustments to account for the slight variance between the plans and the real stage (which was built by carpenters on-site).
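The glyph-based calibration above rests on a standard rigid-transform identity: if the tracker reports the glyph's pose relative to the camera, and the glyph's pose on the stage is known, the camera's pose on the stage follows by composing one with the inverse of the other. The sketch below shows that identity in plain Python. It is an illustration only; the function names and the example poses are hypothetical and are not taken from our software or from the tracking library.

```python
import math

def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]

def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def mat_vec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def compose(outer, inner):
    """Pose that applies `inner` first, then `outer`. A pose is (rotation 3x3, translation)."""
    r_o, t_o = outer
    r_i, t_i = inner
    return mat_mul(r_o, r_i), [a + b for a, b in zip(mat_vec(r_o, t_i), t_o)]

def invert(pose):
    """Inverse of a rigid transform: rotation R^T, translation -R^T t."""
    r, t = pose
    r_t = transpose(r)
    return r_t, [-x for x in mat_vec(r_t, t)]

def rot_y(degrees):
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def camera_pose_in_stage(marker_in_stage, marker_in_camera):
    """stage<-camera = (stage<-marker) composed with (camera<-marker)^-1."""
    return compose(marker_in_stage, invert(marker_in_camera))

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# HYPOTHETICAL set-up: glyph lying at a known spot on the stage, camera off to one side.
marker_in_stage = (IDENTITY, [2.0, 0.0, 1.0])
true_camera = (rot_y(30.0), [0.0, 1.5, 10.0])

# What a tracker would report for that set-up: the glyph's pose in camera coordinates.
marker_in_camera = compose(invert(true_camera), marker_in_stage)

recovered = camera_pose_in_stage(marker_in_stage, marker_in_camera)
print("Recovered camera position:", [round(x, 6) for x in recovered[1]])
```

Setting the virtual camera to the recovered pose makes the rendered stage model line up with the video image, which is the condition the shadow effect depends on.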

Marker Tracking Reliability

AR tracking, like all computer vision algorithms, is sensitive to lighting conditions. We added filtering of the marker position and other code that made the tracking highly robust against lost markers. The venue was a large tent with a slightly translucent roof, which meant lighting conditions inside could vary slightly depending on cloud cover and time of day. Our calibration process allowed for adjusting the ambient light level used by the tracking algorithm. To account for changes in stage light intensity across the stage itself, we supported different light level settings depending on where the glyph was on the stage (for instance, despite working with the lighting crew and using the most diffuse stock available to print the glyphs, one point on the stage caused a specular reflection off the glyph into the camera). All this added up to an extremely reliable tracking system that worked perfectly from rehearsal to final performance.
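As a minimal sketch of the position filtering idea (not our production code), the class below smooths marker positions with a simple exponential filter and holds the last good position for a limited number of frames when the marker is lost. The choice of filter, the class name and the parameter values are all assumptions made for illustration.

```python
class MarkerFilter:
    """Smooths marker positions and bridges short tracking dropouts."""

    def __init__(self, alpha=0.3, max_missed=30):
        self.alpha = alpha            # weight given to each new measurement (0..1)
        self.max_missed = max_missed  # frames to hold the last position after a loss
        self.position = None
        self.missed = 0

    def update(self, measurement):
        """Feed one frame's result: an (x, y, z) tuple, or None if the marker was lost."""
        if measurement is None:
            self.missed += 1
            if self.missed > self.max_missed:
                self.position = None  # lost for too long: stop trusting the old value
            return self.position
        self.missed = 0
        if self.position is None:
            self.position = tuple(measurement)  # first sighting: no history to blend with
        else:
            a = self.alpha
            self.position = tuple(
                a * m + (1 - a) * p for m, p in zip(measurement, self.position)
            )
        return self.position

# HYPOTHETICAL frame sequence with a two-frame dropout in the middle.
marker_filter = MarkerFilter(alpha=0.5, max_missed=3)
for frame in [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), None, None, (2.0, 0.0, 0.0)]:
    print(frame, "->", marker_filter.update(frame))
```

A lower `alpha` gives steadier graphics at the cost of lag, so the value has to be tuned against how quickly the glyph actually moves on stage.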

We also tested how the system responded to camera flashes and adjusted the filtering to handle the resultant overexposure in the video stream. Through careful optimisation we were able to process the video stream at well over 60Hz, which also made the tracking much more robust.
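One simple way to deal with flash-induced overexposure is to skip frames whose overall brightness suddenly jumps above a running baseline, so the tracker never sees them. The sketch below illustrates that approach only; it is an assumption about how such gating could work rather than a description of our filter, and the class name and thresholds are hypothetical.

```python
class FlashGate:
    """Rejects frames whose mean brightness spikes far above the recent baseline."""

    def __init__(self, ratio=1.5, adapt=0.1, max_rejects=3):
        self.ratio = ratio              # spike threshold as a multiple of the baseline
        self.adapt = adapt              # how quickly the baseline follows normal frames
        self.max_rejects = max_rejects  # after this many spikes in a row, accept the new level
        self.baseline = None
        self.rejected = 0

    def accept(self, mean_luma):
        """Return True if the frame should be passed on to the tracker."""
        if self.baseline is None:
            self.baseline = mean_luma
            return True
        spike = mean_luma > self.baseline * self.ratio
        if spike and self.rejected < self.max_rejects:
            self.rejected += 1
            return False
        if spike:
            self.baseline = mean_luma  # sustained change: treat it as the new lighting level
        else:
            self.baseline += self.adapt * (mean_luma - self.baseline)
        self.rejected = 0
        return True

# HYPOTHETICAL per-frame mean brightness values (0-255) with one flash at the third frame.
gate = FlashGate()
results = [gate.accept(luma) for luma in [100, 102, 240, 101, 99]]
print(results)
```

Rejected frames can be treated exactly like a lost marker, and at a high processing rate a dropped frame or two costs very little.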

Failsafe System

We ran an exact duplicate system in parallel, with a second DeckLink and MacBook Pro. In the event of a catastrophic system failure (software or hardware crash) we would signal the stage manager via intercom to instantly switch vision and audio to the backup system. To the audience it would appear as if nothing had gone wrong.